Key takeaways:

- Ceph works well as IOPS-intensive cloud storage for MySQL and similar databases.

- LAMP is the most common application stack on OpenStack, and MySQL on Ceph provides a lower TCO than AWS.

- MySQL performance on SSD-backed Ceph (RBD + filestore based) looks quite good.

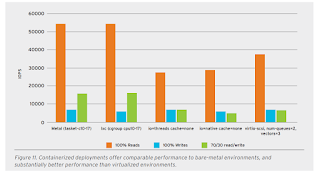

- For reads, containerized MySQL performs roughly on par with the bare-metal setup and much better than the VM-based setup.

- For writes, performance is almost the same across the three setups (bottleneck in filestore?). Tuning the QEMU IO path does not help write performance.

Some detailed notes:

· Diverse organizations are now looking to model the very successful public cloud Database-as-a-Service (DaaS) experiences with private or hybrid clouds — using their own hardware and resources.

· Any storage for MySQL must deliver high IOPS at low latency

· People can choose SSD-backed Ceph RBD at a price point that is even more favorable than public cloud offerings

· Cinder driver trends: RBD has been the default reference driver, along with LVM, since April 2016

· Pros and cons for different configurations of MySQL deployment

· Hardware configuration for OSD server:

o networking: 10Gb

o memory: 32GB

o CPU: E5-2650 V4

o OSD storage: P3700 800GB x 1

o OS storage: S3510 80GB RAID1

· Architecture consideration for MySQL on Ceph

· Public cloud performance (SSD)

· Tests showed that one NVMe disk per 10 cores can provide an ideal level of performance

· Ceph provides much lower TCO than AWS

· Testing environment

o 5x OSD nodes

§ 2x E5-2650 v3 (10 cores)

§ 32GB memory

§ 2x 80GB OS storage

§ 4x P3700 (800GB) for OSD storage

§ 2x 10Gb NIC

§ 8x 6TB SAS (not used?)

o 3x monitors

§ 2x E5-2630 v3 (8 cores)

§ 64GB memory

§ 2x 80GB for OS storage

§ Internal P3700 (800GB)

§ 2x 10Gb NIC

o 12x Clients

§ 2x E5-2670 v2

§ 64GB memory
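The OSD node figures above can be turned into cluster-wide totals with a little arithmetic. The following is only a sketch: the class and function names are made up for illustration, and it counts physical cores only (hyperthreads are not considered).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OsdNode:
    """One OSD node as listed in the notes (2x E5-2650 v3, 4x P3700 800GB)."""
    sockets: int = 2
    cores_per_socket: int = 10
    nvme_drives: int = 4
    nvme_gb: int = 800


def cluster_totals(node: OsdNode, node_count: int) -> dict:
    """Aggregate physical cores and NVMe capacity across identical OSD nodes."""
    cores = node.sockets * node.cores_per_socket * node_count
    drives = node.nvme_drives * node_count
    return {
        "cores": cores,
        "nvme_drives": drives,
        "raw_nvme_gb": drives * node.nvme_gb,  # raw capacity, before replication
        "cores_per_nvme": cores / drives,
    }


if __name__ == "__main__":
    for name, value in cluster_totals(OsdNode(), node_count=5).items():
        print(f"{name}: {value}")
```

For the five OSD nodes this gives 100 physical cores and 20 NVMe drives (16000 GB raw), i.e. 5 physical cores per NVMe drive.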

o Software tuning/config:

§ sysbench

§ Percona MySQL server

· buffer pool: 20% of dataset (12.8G?)

· flushing: innodb_flush_log_at_trx_commit = 0

· parallel doublewrite buffer: based on Percona tests, it performs better than community MySQL (https://www.percona.com/blog/)
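As a rough illustration of the buffer pool sizing rule above, the sketch below derives `innodb_buffer_pool_size` from a dataset size and renders a small `[mysqld]` fragment. The 64 GiB dataset is an assumption inferred from the "12.8G?" figure in the notes, and the function names are hypothetical; only the two settings mentioned in the notes are emitted.

```python
def buffer_pool_mib(dataset_mib: int, percent: int = 20) -> int:
    """Buffer pool size in MiB as a percentage of the dataset (integer math)."""
    return dataset_mib * percent // 100


def render_mysqld_fragment(dataset_gib: int) -> str:
    """Render a minimal [mysqld] fragment with the two settings from the notes."""
    pool_mib = buffer_pool_mib(dataset_gib * 1024)
    lines = [
        "[mysqld]",
        f"innodb_buffer_pool_size = {pool_mib}M",
        # Value taken from the notes; trades durability for write throughput.
        "innodb_flush_log_at_trx_commit = 0",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Assumed dataset size: 64 GiB, so 20% is roughly 12.8 GiB.
    print(render_mysqld_fragment(dataset_gib=64))
```

Note that with `innodb_flush_log_at_trx_commit = 0` the redo log is written and flushed about once per second, so a crash can lose up to a second of committed transactions. That is acceptable for a benchmark but needs a deliberate decision in production.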

§ Ceph:

· network stack:

o net.ipv4.ip_forward=1

net.core.wmem_max=125829120

net.core.rmem_max=125829120

net.ipv4.tcp_rmem= 10240 87380 125829120

net.ipv4.tcp_wmem= 10240 87380 125829120

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_timestamps = 1

net.ipv4.tcp_sack = 1

net.core.netdev_max_backlog = 10000

vm.swappiness=1
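To make the network settings above easier to check, here is a small sketch that parses them and converts the buffer limits to MiB. The helper names are hypothetical and the script only parses and prints; it does not apply anything to a running kernel.

```python
# Settings copied verbatim from the notes above.
SYSCTL_TEXT = """\
net.ipv4.ip_forward=1
net.core.wmem_max=125829120
net.core.rmem_max=125829120
net.ipv4.tcp_rmem= 10240 87380 125829120
net.ipv4.tcp_wmem= 10240 87380 125829120
net.ipv4.tcp_window_scaling = 1
net.ipv4.tcp_timestamps = 1
net.ipv4.tcp_sack = 1
net.core.netdev_max_backlog = 10000
vm.swappiness=1
"""


def parse_sysctl(text: str) -> dict:
    """Parse 'key = value' lines, tolerating uneven whitespace around '='."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = " ".join(value.split())
    return settings


def render_sysctl(settings: dict) -> str:
    """Render the settings in a normalized 'key = value' form."""
    return "".join(f"{key} = {value}\n" for key, value in settings.items())


def to_mib(byte_count: str) -> float:
    """Convert a byte count given as a string to MiB."""
    return int(byte_count) / 2**20


settings = parse_sysctl(SYSCTL_TEXT)

if __name__ == "__main__":
    print(render_sysctl(settings))
    # tcp_rmem / tcp_wmem hold three values: min, default, max (in bytes).
    tcp_rmem_max = settings["net.ipv4.tcp_rmem"].split()[2]
    print(f"socket buffer ceiling: {to_mib(tcp_rmem_max):.0f} MiB")
```

The maximum socket buffer size of 125829120 bytes works out to 120 MiB.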

· filestore:

o filestore_xattr_use_omap = true

filestore_wbthrottle_enable = false

filestore_queue_max_bytes = 1048576000

filestore_queue_committing_max_

filestore_queue_max_ops = 5000

filestore_queue_committing_max_

filestore_max_sync_interval = 10

filestore_fd_cache_size = 64

filestore_fd_cache_shards = 32

filestore_op_threads = 6

· Journal:

o journal_max_write_entries = 1000

journal_queue_max_ops = 3000

journal_max_write_bytes = 1048576000

journal_queue_max_bytes = 1048576000

· MISC:

o # Op tracker

osd_enable_op_tracker = false

# OSD Client

osd_client_message_size_cap = 0

osd_client_message_cap = 0

# Objecter

objecter_inflight_ops = 102400

objecter_inflight_op_bytes = 1048576000

# Throttles

ms_dispatch_throttle_bytes = 1048576000

# OSD Threads

osd_op_threads = 32

osd_op_num_shards = 5

osd_op_num_threads_per_shard = 2
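The OSD-side options above could be collected into a `ceph.conf` fragment along these lines. This is a sketch only: placing them under an `[osd]` section is an assumption (the notes do not say which section was used), and the helper names are hypothetical.

```python
import configparser
import io

# OSD-side values copied from the notes; section placement is an assumption.
OSD_OPTIONS = {
    "osd_enable_op_tracker": "false",
    "osd_client_message_size_cap": "0",
    "osd_client_message_cap": "0",
    "ms_dispatch_throttle_bytes": "1048576000",
    "osd_op_num_shards": "5",
    "osd_op_num_threads_per_shard": "2",
}


def render_osd_section(options: dict) -> str:
    """Render the options as an INI-style [osd] section."""
    parser = configparser.ConfigParser()
    parser["osd"] = options
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


def sharded_worker_threads(options: dict) -> int:
    """Op worker threads per OSD: shards multiplied by threads per shard."""
    return int(options["osd_op_num_shards"]) * int(
        options["osd_op_num_threads_per_shard"]
    )


if __name__ == "__main__":
    print(render_osd_section(OSD_OPTIONS))
    print("op worker threads per OSD:", sharded_worker_threads(OSD_OPTIONS))
    throttle_mib = int(OSD_OPTIONS["ms_dispatch_throttle_bytes"]) // 2**20
    print("dispatch throttle:", throttle_mib, "MiB")
```

With 5 shards and 2 threads per shard, each OSD runs 10 op worker threads, and the 1048576000-byte throttles used throughout these settings correspond to 1000 MiB.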

· Testing results:

o Performance comparison of MySQL on

§ bare metal + KRBD

§ containers + KRBD

§ VM + QEMU RBD + various QEMU IO settings (QEMU event loop optimization across multiple VMs; see https://github.com/qemu/qemu/)

Thanks, -yuan
